AUTHOR: ATHMAKURI NAVEEN KUMAR

DIGITAL PRODUCT INNOVATOR

SENIOR SOFTWARE ENGINEER (FULL STACK DEVELOPER WITH DEVOPS)

athmakuri.naveenkumar@gmail.com

DEVOPS FOR MACHINE LEARNING: ACCELERATING MODEL DEVELOPMENT AND DEPLOYMENT

I am ATHMAKURI NAVEEN KUMAR, a Full-Stack Developer and Cloud DevOps Engineer with 11 years of experience in the IT industry. I specialise in developing robust, secure, large-scale enterprise applications for the banking, healthcare, and e-commerce sectors using ASP.NET Core, .NET Core Blazor, C#, MVC Core, React, AngularJS, Node.js, Python automation, PowerShell scripting, Terraform, and Linux, and in advanced microservice deployments on Azure and AWS Kubernetes.

I am passionate about writing research papers, conducting tech seminars and workshops, and mentoring IT companies, engineering students, and job seekers, and I apply AI and ChatGPT to real-world IT industry work. I have devised development and deployment techniques for large-scale enterprise applications and have published a few technical articles.

Abstract:

MLOps (Machine Learning Operations) has been adopted by industry and researchers over the past five or more years in an effort to increase output. The existing MLOps literature is still mostly fragmented and inconsistent. To fill this gap, this study uses a mixed-methods approach that includes a literature review, survey questions, and expert interviews. It gives a comprehensive review of the essential guidelines, elements, and responsibilities, as well as the resulting architecture and procedures. Additionally, this research defines MLOps and addresses unresolved issues in the area. To assist machine learning researchers and practitioners who wish to automate and run their machine learning products, this study presents an MLOps pipeline that serves product suggestions on an e-commerce platform.

Keywords: MLOps, Machine Learning, Artificial Intelligence, Automation, ML in production.

Machine Learning:

In recent years, machine learning has made considerable advancements, especially in applications for manufacturing. Deep learning models have excelled in applications such as natural language processing, image and audio recognition, and self-driving cars, to mention a few. The use of reinforcement learning to improve robots and industrial processes is another breakthrough. Additionally, explainable AI approaches have been developed to increase the interpretability of ML models, and transfer learning has been used to make training on limited datasets more effective. ML has been applied in manufacturing for a variety of purposes, including supply chain optimisation, predictive maintenance, and quality control.

ML in production has also benefited from developments in automated machine learning (AutoML) and distributed computing. Distributed computing enables ML algorithms to scale and manage vast volumes of data, while AutoML solutions are meant to streamline ML processes and reduce the need for user involvement. These advancements are supported by a number of sources. A Google study shows how scalable and effective machine learning pipelines can be created using Kubernetes and TensorFlow in real-world settings. An IBM study highlights the benefits of AutoML in cutting down the time and expense of ML development. OpenAI explores current developments in deep learning and their effects on different disciplines, including industrial applications. These examples show how significantly machine learning has affected commercial applications and how much room remains for further development.

Machine Learning Operations (MLOps):

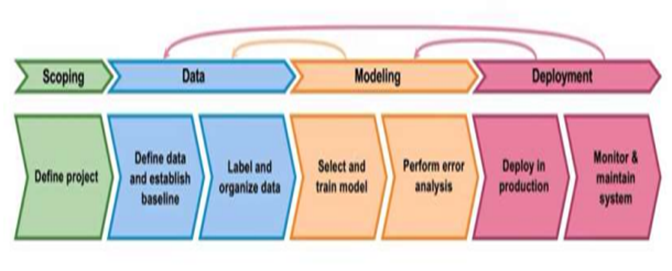

Current literature shows how academia and industry work together to increase ML output. Model development has received a lot of attention during the past 10 years: the academic community has concentrated on developing and benchmarking machine learning models rather than on operating sophisticated machine learning systems in practical settings. MLOps fills this gap between development and production.

The ML engineering team installs and runs the model component in the production environment. Once the full ML project or system is running, it is time to think about monitoring and maintaining it without interfering with production. Prior work describes how challenging and expensive it is to maintain ML systems using the technical debt metaphor, which Ward Cunningham first used in 1992 to illustrate the long-term consequences of moving fast in software engineering.

Thinking through the ML project lifecycle is an effective way to plan all the actions that must be taken during the process. To help ML projects progress through that lifecycle, the MLOps discipline has developed a set of tools and concepts.

Define Project

Define data and establish baseline

Label and organize data

Select and train the model

Perform error analysis

Deploy in Production

Monitor and maintain the system

MLOps Life Cycle
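The lifecycle stages listed above can be thought of as an ordered pipeline. The following is only a minimal sketch under stated assumptions: the `Stage` enum, the `run_lifecycle` helper, and the handler functions are hypothetical names introduced for illustration and are not part of any MLOps framework or of the pipeline studied here.

```python
from enum import Enum
from typing import Callable, Dict, List


class Stage(Enum):
    """Lifecycle stages, in the order they are listed in the text."""
    DEFINE_PROJECT = 1
    DEFINE_DATA_AND_BASELINE = 2
    LABEL_AND_ORGANIZE_DATA = 3
    SELECT_AND_TRAIN_MODEL = 4
    ERROR_ANALYSIS = 5
    DEPLOY = 6
    MONITOR_AND_MAINTAIN = 7


def run_lifecycle(handlers: Dict[Stage, Callable[[dict], dict]]) -> List[str]:
    """Run the registered handlers in lifecycle order.

    Each handler receives a shared context dict and returns an updated one.
    Stages without a handler are skipped (an assumption made only to keep
    the sketch short). Returns the names of the stages that actually ran.
    """
    context: dict = {}
    executed: List[str] = []
    for stage in Stage:  # Enum iteration follows definition order
        handler = handlers.get(stage)
        if handler is None:
            continue
        context = handler(context)
        executed.append(stage.name)
    return executed


# Handlers are registered out of order on purpose; execution order still
# follows the lifecycle, not the registration order.
executed = run_lifecycle({
    Stage.SELECT_AND_TRAIN_MODEL: lambda ctx: {**ctx, "model": "trained"},
    Stage.DEFINE_PROJECT: lambda ctx: {**ctx, "goal": "product suggestions"},
})
print(executed)
```

Keeping the stage order in one place means that each team can own a handler without having to agree separately on sequencing.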

The deployment phase is one of the most exciting and difficult phases of any ML project. Deploying ML models is challenging because it raises both machine learning and software engineering problems. On the machine learning side, teams deploying an ML system must make sure it can handle changes such as concept drift and data drift while it is running in production. On the software engineering side, the challenges include real-time versus batch processing, cloud versus edge or browser deployment, computing resources (CPU, GPU, memory), latency, throughput (QPS), logging, security, and privacy. The production system must also run continuously at the lowest cost while delivering the greatest output. MLOps incorporates model governance and business and legal requirements, and helps to improve the quality of production models.

– Automation: Machine learning and software automation are both necessary for achieving targeted business goals. Diverse teams may concentrate on more important business concerns by automating the lifecycle of ML-powered software, leading to quicker and more dependable business solutions.

– Effectiveness: From conception to deployment, MLOps improves the productivity of all production teams and the methodology used to construct machine learning projects.

– Workflow: Each machine learning project involves a team of data scientists and machine learning engineers who develop state-of-the-art models, manually or with automated tools. When choosing an ML model for training, data scientists take into account the model’s complexity and how the model has evolved. The environments used for development and testing differ from those used for staging and production.
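The data drift mentioned in the deployment discussion above can be checked by comparing the distribution of a feature at training time with its distribution in production. The sketch below uses the Population Stability Index (PSI) as one possible measure; this is a swapped-in technique chosen for illustration, not a method taken from the study. The function name, the bin count, and the 0.2 alert threshold (a commonly quoted rule of thumb) are all assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
) -> float:
    """PSI between a reference sample and a current sample of one feature.

    Bin edges are equal-width over the reference range; current values
    outside that range are clamped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant features

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        eps = 1e-6  # floor so that empty bins do not produce log(0)
        return [max(c / len(values), eps) for c in counts]

    ref = bucket_shares(reference)
    cur = bucket_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


DRIFT_THRESHOLD = 0.2  # assumed alert level, to be tuned per feature

training_values = [i / 100 for i in range(100)]
shifted_values = [v + 0.5 for v in training_values]

psi_same = population_stability_index(training_values, training_values)
psi_shifted = population_stability_index(training_values, shifted_values)
drift_detected = psi_shifted > DRIFT_THRESHOLD
```

A check of this kind would typically run on a schedule against recent production inputs, with an alert or a retraining job triggered when the threshold is exceeded.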

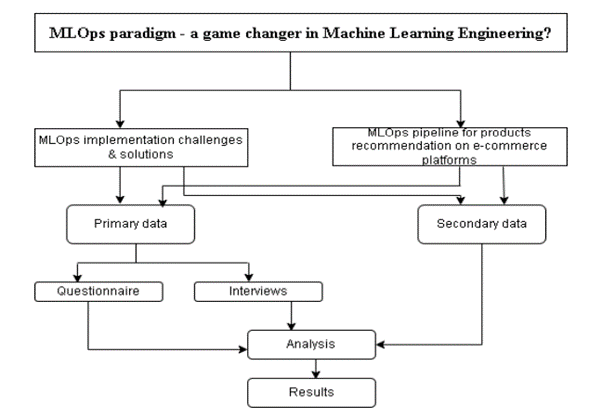

MLOps Paradigm:

Data Collection Methodologies:

The mixed-methods research process comprised a literature study, a survey, and interviews with experts in ML, data engineering, and software engineering from diverse organisations. The targeted roles were MLOps engineers, data scientists, data engineers, DevOps engineers, software engineers, backend developers, and AI architects. The investigation uncovered how these interviewees and respondents make sense of MLOps. To find more interviewees and questionnaire respondents, the research used professional networks, social media, and online platforms such as LinkedIn to reach people working in the defined field of study, which helps to obtain trustworthy feedback.

Secondary Data:

The majority of the material in this study was accessed from reliable scholarly resources, mostly through the Google Scholar search engine. Searching for terms like "MLOps survey", "MLOps machine learning", and "MLOps DevOps" surfaced a wealth of useful publications, books, and blogs about MLOps.

Machine learning operations is a multidisciplinary field that combines software engineering techniques, data engineering, and machine learning to increase the speed and effectiveness of machine learning development and deployment. The body of literature on MLOps has been growing, with research and development efforts aimed at improving the end-to-end machine learning development process. These initiatives attempt to speed up and streamline model deployment while enhancing the security, reliability, and transparency of machine learning systems.

Various organisations have created tools and frameworks to support MLOps. Technologies and frameworks used across the MLOps life cycle include TensorFlow Extended (TFX), Kubeflow, Apache Airflow, AWS SageMaker, and Google Cloud AI Platform. These technologies offer a centralised platform for creating, deploying, and maintaining machine learning models, with features such as automated model training, model serving, and monitoring.

The interview approach is frequently used to collect data because it lets the researcher explore the topic under study in depth, pursue further information, and pose follow-up questions. Interviews can be structured (using a predefined questionnaire) or unstructured (allowing a more open-ended dialogue), and can be carried out in person or over the phone. The approach is adaptable and can be modified to meet particular research demands and objectives. Interviews can also be used to collect information from a small group of participants, which makes them appropriate for exploratory or qualitative investigations.

To specifically select experts in the ML area, a purposive (strategic) sampling method was adopted. Purposive sampling is frequently employed in qualitative research to make sure that the participants chosen are the best match for the research topic and can give the most pertinent information. Participants may be chosen based on their background, level of experience, subject-matter knowledge, or point of view. In contrast to random sampling, purposive sampling selects individuals based on predetermined traits or criteria that are pertinent to the study topic or hypothesis.

In this study, three semi-structured interviews were conducted. The first interview, on February 15, 2023, was with a group of experts at a retail firm. The eight employees of the organisation who were working on the MLOps project greatly aided this investigation; the team was made up of software developers, ML engineers, MLOps engineers, and AI architects. The second interview, on February 23, 2023, was with an AI research scientist. The third interview, on February 27, 2023, was with a senior data consultant.

A semi-structured interview is a research technique that combines the adaptability of an unstructured interview with the framework of a structured interview. The researcher works from a list of predefined questions, but the format also allows follow-up questions that dig deeper into participants’ comments. Semi-structured interviews are frequently used in qualitative research to gather data about participants’ experiences, viewpoints, and attitudes. They preserve some structure and consistency across interviews while allowing a more in-depth study of the participant’s opinions than a structured interview.

Ethical Considerations:

Like any technology, MLOps introduces a number of ethical issues that need to be taken into account to ensure that models are created, deployed, and used responsibly. The following subsections discuss some of the most important ethical issues in MLOps.

Fairness and Bias:

The potential for bias and unfairness in machine learning models is one of the most important ethical issues in MLOps. Models become biased when they are trained on biased data or when pre-existing biases are encoded in the data. Particularly in lending, hiring, and criminal justice applications, bias can result in inaccurate or discriminatory outcomes. MLOps teams must work together to make sure that models are trained on diverse, representative data and are evaluated for bias before they are deployed to production.
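One way to evaluate a model for bias before deployment is to compare how often it returns a favourable prediction for each group. The sketch below computes the demographic parity gap, which is only one of several fairness measures and is not prescribed by this study. The function names, the toy predictions, and the `MAX_GAP` threshold are hypothetical and would need to be chosen per application.

```python
from collections import defaultdict
from typing import Dict, Sequence


def positive_rate_by_group(
    predictions: Sequence[int],
    groups: Sequence[str],
) -> Dict[str, float]:
    """Share of positive (1) predictions within each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(
    predictions: Sequence[int],
    groups: Sequence[str],
) -> float:
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Toy data: group "a" is approved 3 times out of 4, group "b" once out of 4.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

MAX_GAP = 0.1  # assumed tolerance; a real limit is a policy decision
gap = demographic_parity_gap(preds, groups)
passes_bias_check = gap <= MAX_GAP
```

In a pipeline, a failed check of this kind could block promotion to production until the training data or the model has been reviewed.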

Privacy and Security:

Security and privacy are important factors in MLOps. Machine learning models commonly use sensitive data, including financial information, medical records, and personal information, to generate predictions. MLOps teams are responsible for protecting both the data used to train and deploy the models and the models themselves from unauthorised access and modification.
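As a small illustration of guarding a model against unauthorised modification, a team might record a cryptographic digest of the model artifact at release time and verify it before loading. The sketch below uses Python's standard `hashlib` and `hmac` modules; the function names and the in-memory byte strings standing in for a model file are assumptions made for illustration. A digest only helps if the recorded value is itself stored somewhere an attacker cannot change.

```python
import hashlib
import hmac


def fingerprint(artifact: bytes) -> str:
    """SHA-256 digest of a serialized model artifact, as a hex string."""
    return hashlib.sha256(artifact).hexdigest()


def is_unmodified(artifact: bytes, expected_digest: str) -> bool:
    """True if the artifact still matches the digest recorded at release."""
    # compare_digest does a constant-time comparison of the two hex strings
    return hmac.compare_digest(fingerprint(artifact), expected_digest)


# Stand-in bytes for a serialized model; a real pipeline would read a file.
released_model = b"model-weights-v1"
recorded_digest = fingerprint(released_model)

tampered_model = b"model-weights-v1-tampered"

ok_original = is_unmodified(released_model, recorded_digest)
ok_tampered = is_unmodified(tampered_model, recorded_digest)
```

This covers integrity only; access control and encryption of the training data are separate concerns that this sketch does not address.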

Explainability and Transparency:

Because machine learning models are complex, it can be difficult to understand the reasoning behind a particular decision. This lack of transparency can make people distrust machine learning. So that stakeholders can understand how decisions are made, MLOps teams must make sure that models are transparent and easy to interpret. MLOps teams must also think about the ethical application of machine learning models.

For instance, models employed in the criminal justice system need to be impartial and fair, and models used in the medical field ought to be used to help people rather than to harm or exploit them. MLOps teams must ensure that model development, deployment, operation, and usage are all carried out ethically and for the intended purpose.

Continuous Monitoring and Improvement:

To make sure that machine learning models operate as intended and that any biases or other ethical issues are addressed, MLOps teams must continually monitor and improve these models. This can entail upgrading the algorithms, updating the data used to train the models, or changing the way the models are used in production. MLOps teams must make sure that models are created, used, and maintained in an ethical and responsible manner. By taking the factors above into account, MLOps teams can help ensure that machine learning models benefit rather than harm society.

Every step of this study was carried out in accordance with research ethics. The questionnaire stated that no personal information would be requested and that all responses would be fully anonymous. Before each interview, participants were told that the interview would be recorded and, if required, quoted in the text. They were then informed of their rights, including the freedom to end the interview at any moment and the right to decline any question they were uncomfortable answering. They were also told how their names would be obscured and how their data would be handled and stored in compliance with GDPR guidelines.

Conclusion:

By providing systematic and automated management of the whole machine learning process, from creation to deployment and maintenance, MLOps has changed the field of machine learning engineering. By applying established software engineering and operations practices, it has improved the maturity and dependability of machine learning development and made creating, testing, and deploying models quicker and easier.

MLOps also promotes better communication between data scientists, machine learning engineers, software developers, and operations teams. With clearly defined roles and responsibilities, these teams can work together to develop and deploy machine learning models that suit the needs of the company. MLOps makes it simpler to manage every phase of the ML workflow: data pre-processing, model training, release, and monitoring. Automated tools and procedures reduce the amount of manual effort required, allowing teams to concentrate on more complex activities such as feature engineering and model optimisation.

MLOps further improves model reproducibility and dependability. It combines continuous integration and deployment pipelines, automated testing, and version control so that models are produced quickly and effectively, reducing the possibility of mistakes. Finally, MLOps addresses model deployment and maintenance problems: containerisation with Docker and orchestration with Kubernetes make it simpler to deploy models in a variety of environments, from on-premises data centres to cloud-based platforms.
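The combination of automated testing and versioning described above is often expressed as a promotion gate in a CI/CD pipeline: a candidate model replaces the production model only if its checks pass and it performs at least as well. The following is a minimal sketch under stated assumptions; the `ModelCandidate` class, the `should_promote` function, the version strings, and the accuracy figures are hypothetical and do not describe any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelCandidate:
    """Minimal record a pipeline might keep for each model version."""
    version: str
    accuracy: float     # metric measured on a held-out evaluation set
    tests_passed: bool  # outcome of the automated test suite


def should_promote(
    candidate: ModelCandidate,
    production: ModelCandidate,
    min_gain: float = 0.0,
) -> bool:
    """Promote only if tests pass and accuracy does not regress.

    min_gain is an assumed knob: the improvement a candidate must show
    over production before it is released.
    """
    if not candidate.tests_passed:
        return False
    return candidate.accuracy >= production.accuracy + min_gain


production = ModelCandidate(version="1.4.0", accuracy=0.90, tests_passed=True)
better = ModelCandidate(version="1.5.0", accuracy=0.92, tests_passed=True)
untested = ModelCandidate(version="1.5.1", accuracy=0.95, tests_passed=False)

promote_better = should_promote(better, production)
promote_untested = should_promote(untested, production)
```

A gate like this is usually one step in a larger pipeline, followed by building a container image for the approved version and rolling it out.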